Ashutosh Saxena, CEO of TorqueAGI and a pioneer in robotics and AI, sits down with Jiquan Ngiam, Co-Founder and CEO of Lutra, for a deep dive into the evolving landscape of AI agents and how they communicate.

As AI continues to evolve, building systems that can communicate seamlessly, adapt, and operate at scale is at the forefront of innovation. One such breakthrough is the Model Context Protocol (MCP), which lets AI agents communicate directly with applications and data sources, paving the way for more efficient, collaborative, and intelligent robotics ecosystems. MCP is an open standard introduced by Anthropic and since adopted widely across the ecosystem, including by OpenAI, Google, and Microsoft.

In this conversation, we explore the potential of MCP and its transformative role in the robotics industry. We discuss the challenges of productionizing enterprise-ready agents, balancing security with speed, and how these innovations are revolutionizing the way companies approach enterprise applications and automation.

Understanding Lutra: Pioneering Innovation in Agentic AI for Enterprises

Ashutosh Saxena: Can you explain what Lutra does and how your work is pushing the boundaries of enterprise applications and AI-to-application communication?

Jiquan Ngiam: When ChatGPT first came out, we saw the power of these models to reason and write code. Our core mission at Lutra is to give these models their "arms and legs" from a software perspective. We want to give them the ability to connect to data sources and take action.

When you use Lutra, it takes natural language instructions and converts them into snippets of software code. It's almost like a loop:

- The model takes an action

- Observes the world

- Then takes another action until the task is complete.
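A minimal sketch of that act-observe loop in Python, assuming the model is wrapped as a hypothetical `propose_action` callable that returns the next code snippet (or `None` when it judges the task done) and that a hypothetical `execute` callable runs the snippet and reports what happened. This illustrates the general pattern only; it is not Lutra's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    action: str       # what the agent did (e.g. a generated code snippet)
    observation: str  # what it observed after doing it


def run_agent(
    propose_action: Callable[[list[Step]], Optional[str]],  # hypothetical model wrapper
    execute: Callable[[str], str],                           # hypothetical sandboxed runner
    max_steps: int = 10,
) -> list[Step]:
    """Take an action, observe the result, repeat until the model signals completion."""
    history: list[Step] = []
    for _ in range(max_steps):  # hard cap so a confused model cannot loop forever
        action = propose_action(history)  # the model sees every prior step
        if action is None:                # the model decided the task is complete
            break
        observation = execute(action)     # run it and capture what happened
        history.append(Step(action, observation))
    return history
```

The `max_steps` cap is a design choice worth keeping in any real loop: the model, not the code, decides when it is finished, so a bound is the simplest protection against runaway iterations.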

The Model Context Protocol (MCP) is the backbone of this connection. We noticed that before MCP, you had to build the entire agent from end to end. Now, thanks to MCP, any agent can talk to any kind of backend system. This is what's allowing us to see a lot of adoption. Enterprises have a long tail of things they want the AI to work with.

For example, creating Jira tickets from a meeting transcript is a manual and annoying task. With this new world of interoperable agents and MCP, you can now connect your favorite data system to your favorite front end, whether it's GPT, Claude, or Slack.
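To make the "any agent can talk to any backend" idea concrete, here is a stdlib-only sketch of the server side of that exchange. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the protocol's method names for discovering and invoking tools. Everything else is an assumption for illustration: the `create_jira_ticket` tool is hypothetical, it writes to an in-memory list instead of calling Jira, and the sketch omits MCP's initialization handshake and transport layer. For real servers, the official MCP SDKs handle those parts.

```python
import json

# In-memory stand-in for a Jira backend (hypothetical; no real Jira API is called).
TICKETS: list[dict] = []

# Tool metadata the agent discovers via tools/list: a description plus a JSON Schema.
TOOLS = {
    "create_jira_ticket": {
        "description": "Create a Jira ticket for one action item, e.g. from a meeting transcript.",
        "inputSchema": {
            "type": "object",
            "properties": {"summary": {"type": "string"}, "assignee": {"type": "string"}},
            "required": ["summary"],
        },
    }
}


def create_jira_ticket(summary: str, assignee: str = "unassigned") -> str:
    key = f"DEMO-{len(TICKETS) + 1}"  # hypothetical ticket key format
    TICKETS.append({"key": key, "summary": summary, "assignee": assignee})
    return f"Created {key}: {summary} (assignee: {assignee})"


HANDLERS = {"create_jira_ticket": create_jira_ticket}


def _error(req: dict, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Answer one JSON-RPC request for the tools/list or tools/call methods."""
    req = json.loads(raw)
    method, params = req.get("method"), req.get("params", {})
    if method == "tools/list":
        result = {"tools": [{"name": name, **spec} for name, spec in TOOLS.items()]}
    elif method == "tools/call":
        if params.get("name") not in HANDLERS:
            return _error(req, -32602, f"Unknown tool: {params.get('name')}")
        text = HANDLERS[params["name"]](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return _error(req, -32601, f"Method not found: {method}")
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})
```

Because the agent learns the tool's name, description, and argument schema at runtime, the same front end (GPT, Claude, or a Slack bot) can use this backend without custom glue code.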

The Model Context Protocol (MCP): Revolutionizing AI Agent Communication

Ashutosh Saxena: Could you provide an in-depth explanation of the Model Context Protocol (MCP) and its significance in the enterprise AI landscape? How is MCP different from existing communication protocols, and how are you implementing it at Lutra to enhance the capabilities of autonomous systems in real-world applications?

Jiquan Ngiam: Traditionally, connecting two software systems meant hiring an engineer to write custom code. But with AI, the model itself can read the documentation and implement the integration. We realized there's a big difference between designing an app for a developer and designing one for an AI. If a developer passes the wrong email address, the API might just return an obscure error code. But when you design for an AI using MCP, the system should say, "That didn't work. Here's what you can do to make it work." This allows the AI to fix the problem automatically and continue with the task.
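A sketch of that design principle, under stated assumptions: a hypothetical `assign_ticket` tool checks an email against a hypothetical user directory and, on failure, returns an MCP-style result with `isError: True` and a message that tells the model how to recover. A real model would read that message in natural language; the toy `agent_assign` loop below stands in for it by extracting the suggested address with a regular expression.

```python
import re

KNOWN_USERS = {"ana@example.com", "raj@example.com"}  # hypothetical user directory


def assign_ticket(email: str) -> dict:
    """Return an MCP-style tool result; failures explain how to fix the call."""
    if email in KNOWN_USERS:
        return {"content": [{"type": "text", "text": f"Assigned to {email}"}], "isError": False}
    hint = "That didn't work: no user has that address."
    local = email.split("@")[0]
    matches = sorted(u for u in KNOWN_USERS if u.startswith(local + "@"))
    if matches:  # suggest the closest valid address instead of a bare error code
        hint += f" Did you mean {matches[0]}? Retry with that address."
    else:
        hint += " Valid addresses: " + ", ".join(sorted(KNOWN_USERS)) + "."
    return {"content": [{"type": "text", "text": hint}], "isError": True}


def agent_assign(email: str, max_attempts: int = 2) -> dict:
    """Toy agent: on error, act on the tool's suggestion and retry."""
    result = assign_ticket(email)
    for _ in range(max_attempts - 1):
        if not result["isError"]:
            break
        # Stand-in for the model reading the hint: pull out the suggested address.
        m = re.search(r"Did you mean (\S+)\?", result["content"][0]["text"])
        if m is None:
            break
        result = assign_ticket(m.group(1))
    return result
```

The contrast with a conventional API is the error payload: instead of a bare status code, the failure carries enough context for the caller to correct itself without a human in the loop.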

MCP is also different in that it can be designed to handle longer latencies. An API may need to return a response in milliseconds, but an AI tool might run for a few seconds, calling multiple APIs behind the scenes and retrying. All these design parameters are subtly different for AI systems. The protocol is an open standard that is evolving rapidly; its ongoing development and community contributions can be followed in its public GitHub repository. It goes beyond simple function calls to include documentation for the AI, which might include example prompts. The newest evolution, MCP UI, even provides instructions on how to render a user interface for the AI.
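The latency point can be sketched as a tool wrapper that absorbs transient backend failures with retries and exponential backoff, and only reports to the model once it has either a result or an actionable failure. The `fetch` callable, the retry count, and the delays are assumptions chosen for illustration; the result shape follows MCP's `content`/`isError` convention.

```python
import time
from typing import Callable


def call_with_retries(fetch: Callable[[], str], attempts: int = 3, base_delay: float = 0.5) -> dict:
    """Retry a flaky backend call (hypothetical `fetch`) before returning an MCP-style result."""
    errors: list[str] = []
    for attempt in range(attempts):
        try:
            return {"content": [{"type": "text", "text": fetch()}], "isError": False}
        except Exception as exc:  # sketch only: real code should catch narrower error types
            errors.append(f"attempt {attempt + 1}: {exc}")
            if attempt < attempts - 1:
                time.sleep(base_delay * 2 ** attempt)  # waits 0.5s, 1s, ... between tries
    message = (
        "Backend unavailable after retries (" + "; ".join(errors) + "). "
        "Try again later or narrow the request."
    )
    return {"content": [{"type": "text", "text": message}], "isError": True}
```

A few seconds of retrying would be unacceptable inside a millisecond-budget API, but it is a reasonable trade for an agent tool: the model is spared from handling every transient failure itself, and when the tool does give up, it says so in words the model can act on.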

The Future of Autonomous Agents: Transforming Industries with MCP

Ashutosh Saxena: MCP could transform and expand what agents can do. How do you see the future of autonomous agents evolving in industries like robotics? What role does MCP play in shaping that future, particularly in scenarios where different physical agents need to coordinate?

Jiquan Ngiam: I think we are seeing a lot of multi-agent and sub-agent systems. You can have agents that are very specialized, each with their own prompts, tools, and perception. Then there's an orchestrator agent that figures out the overall goal.
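A toy sketch of the orchestrator/sub-agent pattern: each specialist carries its own prompt and tools, and the orchestrator turns an overall goal into `(agent, task)` steps. The `SubAgent` class, the `plan` callable, and the `tool: argument` task format are all hypothetical; in a real system the plan and each sub-agent's tool choice would come from a model, and the tools could be MCP servers.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SubAgent:
    name: str
    prompt: str  # specialized instructions (carried along but unused by this toy runner)
    tools: dict[str, Callable[[str], str]]

    def run(self, task: str) -> str:
        # Toy task format "tool_name: argument"; a real sub-agent would let its model choose.
        tool_name, _, arg = task.partition(":")
        return self.tools[tool_name.strip()](arg.strip())


def orchestrate(
    goal: str,
    plan: Callable[[str], list[tuple[str, str]]],  # hypothetical planner: goal -> steps
    agents: dict[str, SubAgent],
) -> list[str]:
    """Break the goal into steps and route each one to the matching specialist."""
    return [agents[name].run(task) for name, task in plan(goal)]
```

Usage: with a `research` agent exposing a `search` tool and a `writer` agent exposing a `draft` tool, a plan such as `[("research", "search: MCP"), ("writer", "draft: MCP")]` sends each step to the right specialist, and `orchestrate` collects their outputs in order.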

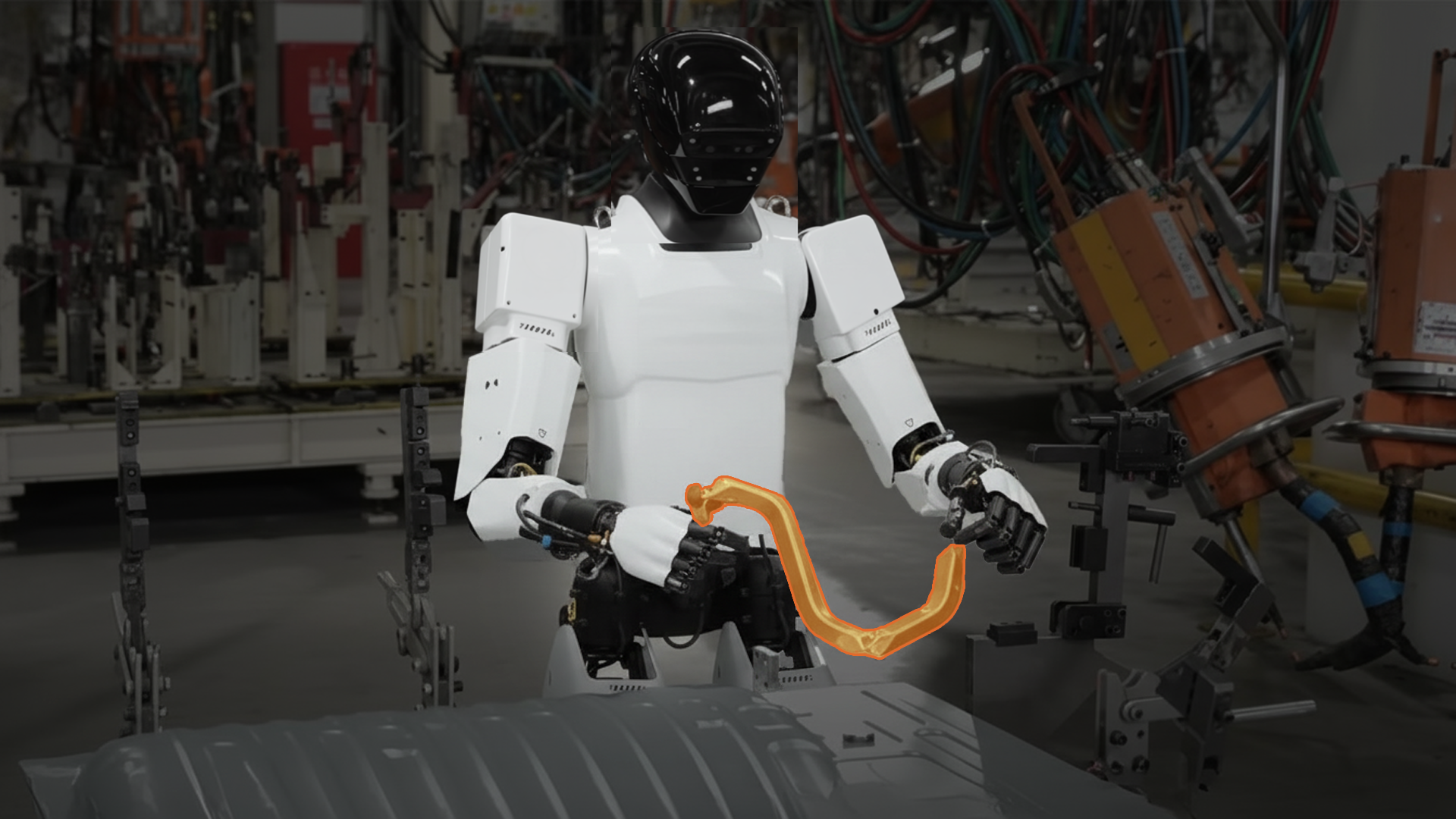

Ashutosh Saxena: Currently, in robotics, the modules (e.g., detector, trajectory planner) communicate with each other through manually defined interfaces. In the future, as traditional software modules are replaced by agents, do you see MCP becoming the way robotics agents talk to each other?

Jiquan Ngiam: This is a very similar paradigm to what we see in robotics, where different components need to communicate. I could see MCP being used to have a "walking agent" talk to a "manipulation agent" for whole-body control. It's a way of separating specific AI models and orchestrating their actions, and it's a paradigm we're seeing take hold in our space.

Reflections on Multimodal AI: Insights and Challenges

Ashutosh Saxena: Looking back at your work on deep learning, at startups, and at Google, what were some of the toughest challenges you faced? How have those lessons shaped your current work at Lutra?

Jiquan Ngiam: The most interesting part of multimodal AI is that deep learning used to be very single-modality. You would train a vision model, a language model, and then try to stitch them together. The transformer architecture with its token-based approach has allowed us to handle different signals—audio, video, image, code—all in a very elegant way. They all get tokenized and then embedded into a shared space for reasoning.
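A toy, stdlib-only sketch of "tokenize, then embed into a shared space": words are looked up in an embedding table, an image is cut into small patches that are each linearly projected to the same width, and both token streams are concatenated into one sequence a transformer could attend over. The vocabulary, patch size, width `D`, and random weights are all illustrative assumptions; real models learn these weights and typically also add positional and modality embeddings, which this sketch omits.

```python
import random

D = 4                    # shared embedding width (toy size; real models use thousands)
PATCH = 2                # image patch side length in pixels
rng = random.Random(0)   # fixed seed so the toy weights are reproducible

VOCAB = {"move": 0, "the": 1, "button": 2, "left": 3}
TEXT_EMB = [[rng.gauss(0, 1) for _ in range(D)] for _ in VOCAB]               # token id -> vector
PROJ = [[rng.gauss(0, 1) for _ in range(D)] for _ in range(PATCH * PATCH)]   # patch pixels -> vector


def embed_text(words: list[str]) -> list[list[float]]:
    return [TEXT_EMB[VOCAB[w]] for w in words]


def embed_image(img: list[list[float]]) -> list[list[float]]:
    """Split a grayscale image into PATCH x PATCH patches and project each one to D dims."""
    tokens = []
    for r in range(0, len(img), PATCH):
        for c in range(0, len(img[0]), PATCH):
            patch = [img[r + i][c + j] for i in range(PATCH) for j in range(PATCH)]
            tokens.append([sum(p * PROJ[k][d] for k, p in enumerate(patch)) for d in range(D)])
    return tokens


def embed_multimodal(words: list[str], img: list[list[float]]) -> list[list[float]]:
    # Both modalities end up as D-dimensional vectors in one sequence.
    return embed_text(words) + embed_image(img)
```

A 4x4 image yields four patch tokens, so pairing it with a three-word instruction produces a seven-token sequence in which every token has the same width, which is what lets a single model reason over both.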

This has changed how we work at Lutra. For example, when you give an AI agent a command to build a website, it's often hard to express a visual change like "move that button to the left a bit" with just text. It’s much easier to provide a screenshot. With MCP, an agent can now access a web browser, take a screenshot of its own work, bring that visual information back into its context, and update the code accordingly. This creates a powerful feedback loop for the agent, which can now handle multimodal information and is a key element for effective orchestration.
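The screenshot step of that feedback loop can be sketched as a tool whose result carries an image. MCP tool results can include image content blocks (`type: "image"` with base64 `data` and a `mimeType`), which is how the visual information gets back into the model's context. The `capture_png` hook is a hypothetical stand-in for whatever browser automation actually takes the screenshot.

```python
import base64
from typing import Callable


def screenshot_tool(capture_png: Callable[[str], bytes], url: str) -> dict:
    """Wrap a page screenshot as an MCP-style tool result the model can look at."""
    png = capture_png(url)  # hypothetical hook, e.g. a headless-browser screenshot call
    return {
        "content": [
            {"type": "text", "text": f"Screenshot of {url}"},
            {
                "type": "image",
                "data": base64.b64encode(png).decode("ascii"),  # image bytes travel as base64 text
                "mimeType": "image/png",
            },
        ],
        "isError": False,
    }
```

After each code edit, the agent can call this tool, compare the rendered page with the request ("move that button to the left a bit"), and decide whether another edit is needed.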

Ashutosh Saxena: Multimodal AI is central to robotics. Robotics data ranges from sensor streams, such as cameras and radars, to behavioral plans and control policies. Spatio-temporal context and an understanding of geometry are essential for AI in this domain.

Enterprise-Ready AI: Challenges in Building Reliable Agents

Ashutosh Saxena: As you work to make agents "enterprise-ready," what are the most significant challenges you've encountered in terms of productionization? How do you ensure scalability and reliability in enterprise environments?

Jiquan Ngiam: Going from a demo to a system you can truly rely on is a huge challenge. We think about this in terms of observability, governance, and enablement.

- First, you need observability—you have to know what the agent is doing. Is it doing something you don't want it to do?

- This leads to governance, which means putting guardrails around the system. You need to be able to prevent the agent from going rogue and deleting all your Jira tickets, for instance.

- Finally, there's enablement. Once you have a secure, reliable system, how do you make it easy for enterprises to integrate more and more tools?
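The three layers can be sketched as a small gateway that sits between agents and their tools: every call is recorded (observability), each role may only invoke the tools it has been granted (governance), and new tools are added through one registration call (enablement). The `ToolGateway` class and its role model are hypothetical illustrations, not MintMCP's API.

```python
import time
from typing import Callable


class ToolGateway:
    """Toy gateway: audit-logs every call, enforces per-role permissions, registers tools."""

    def __init__(self) -> None:
        self.tools: dict[str, Callable[..., str]] = {}
        self.allowed: dict[str, set[str]] = {}  # role -> names of tools it may call
        self.audit_log: list[dict] = []

    def register(self, name: str, fn: Callable[..., str], roles: list[str]) -> None:
        """Enablement: one call makes a tool available to the listed roles."""
        self.tools[name] = fn
        for role in roles:
            self.allowed.setdefault(role, set()).add(name)

    def call(self, role: str, name: str, **kwargs) -> dict:
        permitted = name in self.allowed.get(role, set())
        # Observability: record every attempt, including the ones that get blocked.
        self.audit_log.append(
            {"ts": time.time(), "role": role, "tool": name, "args": kwargs, "permitted": permitted}
        )
        if not permitted:  # Governance: refuse calls outside the role's grant.
            text = f"Tool '{name}' is not permitted for role '{role}'."
            return {"content": [{"type": "text", "text": text}], "isError": True}
        return {"content": [{"type": "text", "text": self.tools[name](**kwargs)}], "isError": False}
```

Usage: registering a `search_tickets` tool for an `agent` role and a `delete_all_tickets` tool only for `admin` means a rogue agent's attempt to wipe Jira is refused, and the attempt still shows up in the audit log for review.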

We built MintMCP to provide this kind of secure, governed infrastructure. It allows us to help enterprises get more value from their agents and deploy them reliably, ensuring things like proper access control and logging. The reality is, if an agent goes rogue and deletes all your Jira tickets, that's probably not a good thing.

Ashutosh Saxena: That's really interesting because we see strong parallels with our robotics customers. On one hand, just as an AI needs connectors and access tokens to get started, a robot needs a connection to work at the edge. Access and integration are key challenges.

The second one is guardrails. For critical use cases, they are extremely important. We can't have a robot hallucinate and jump off a cliff, so that kind of testing and interpretability is a core focus for any enterprise AI, but even more so for TorqueAGI.

The good news is that with generative AI, the models have powerful generalization abilities. We're seeing that you don't need a huge dataset to get started anymore. The problems we're focused on now are more about how to deploy, govern, and secure these agents, which is a great place to be.

The Road Ahead: How MCP is Shaping the Future of Autonomous Robotics and Industry Transformation

As the landscape of robotics continues to evolve, the Model Context Protocol (MCP) represents a crucial step forward in enabling seamless agent-to-agent communication, which is vital for building more autonomous, collaborative, and intelligent systems.

Jiquan Ngiam’s insights into the practical challenges of productionizing enterprise-ready agents highlight the complexity of making these innovations scalable, reliable, and secure in real-world applications. By addressing the key needs for faster development cycles, secure and safe operation, and robust inter-agent communication, MCP promises to revolutionize industries from manufacturing to healthcare.

The discussion also reflects how foundational research, such as Ngiam’s work in multimodal deep learning, shapes the trajectory of current advancements in robotics, bringing us closer to realizing truly autonomous systems that can adapt and function in dynamic environments.

Looking ahead, MCP’s potential to transform the way robots interact and operate will undoubtedly drive the future of robotics, empowering industries to operate more efficiently and securely at scale.

Whether you’re dealing with dynamic environments, moving objects, or difficult weather conditions, TorqueAGI is ready to add even more intelligence to your robotic stack. Contact us to schedule a demo and discover how we can assist you.