How To Build

Physical AI

The Physical AI Blueprint: Bringing Intelligence from Lab to Real World

This blueprint is for those building the next generation of intelligent robots, where advances in AI open the door to a $10 trillion market opportunity.

This moment marks a turning point — but only if we can construct reliable AI that truly captures the uniqueness of the physical world. Physical AI is the intelligence that lets machines see, reason, and act safely in dynamic environments. It is a very different challenge from mastering tokens in the cloud. It must perform in messy, unpredictable conditions where safety, reliability, and real-time responsiveness define success. AI has shown promise in controlled labs, but the complexity and variability of real-world physics quickly expose its limits.

Some are attempting to close this gap by repeating the playbook of digital AI, simply feeding more data to larger models. But robots do not have the luxury of infinite datasets or years to learn from trial and error. Physical AI is not about brute-force data; it is about scaling by design. Like constructing a skyscraper governed by the laws of physics, you cannot just stack more bricks; you need blueprints, foundations, and integrated systems that allow the entire structure to stand and operate safely.

As we overcome these challenges, we will see robots move beyond labs and demos to operate reliably across warehouses, fields, factories, hospitals, and homes.

Why Robots, Why Now?

The case for robotics has never been stronger. Labor shortages, aging populations, and supply chain fragility are straining industries worldwide. In sectors such as agriculture, logistics, manufacturing, and construction, the gap between available labor and operational demand continues to widen, making automation an economic necessity rather than a luxury. Robots now offer precision, safety, and resilience where human capacity is limited — from harvesting crops to handling packages and performing repetitive, physically demanding work.

At the same time, the convergence of affordable hardware, AI-driven software, and specialized compute has made this moment fundamentally different from past automation cycles. Just as mobile devices and cloud infrastructure unlocked the app economy, the fusion of robotics and AI is creating the foundation for a new era of intelligent, adaptive work.

Why Current Robotics Is Stuck — and Why Robots Need Physical AI

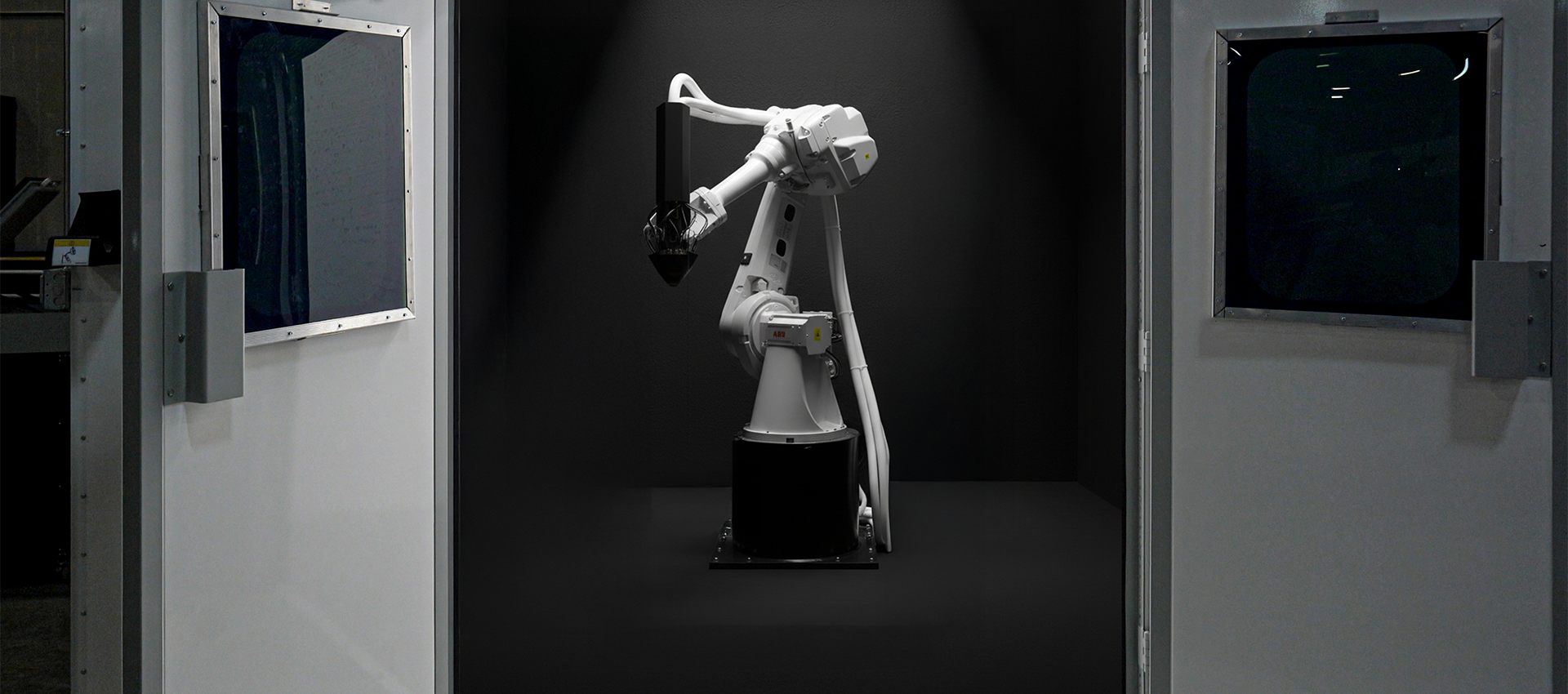

Most robots still work only in controlled environments. They perform well when conditions match a script but fail when reality shifts: dust, reflections, or moving people quickly expose brittleness. A model that is 99 percent accurate may look impressive in a demo, yet a robot makes many decisions per task, so a 1 percent error rate compounds, and a single failure in perception or control can halt production or cause damage.
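To make the 99 percent figure concrete, here is a minimal sketch of how per-step accuracy compounds over a multi-step task. It assumes every step succeeds independently with the same probability, which is a simplification. The function names and the example numbers (100 steps, 600 decisions per hour, an 8-hour shift) are illustrative assumptions, not measurements from any real system.

```python
def task_success_probability(step_accuracy: float, steps: int) -> float:
    """Probability that every step of a task succeeds.

    Assumes steps are independent and equally reliable (a simplification).
    """
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be in [0, 1]")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return step_accuracy ** steps


def expected_failures(step_accuracy: float, steps_per_hour: int, hours: float) -> float:
    """Expected number of failed steps over a period of operation."""
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be in [0, 1]")
    return (1.0 - step_accuracy) * steps_per_hour * hours


if __name__ == "__main__":
    # Hypothetical workload: a 100-step task, 600 decisions per hour, 8 hours.
    print(f"P(100-step task succeeds): {task_success_probability(0.99, 100):.3f}")
    print(f"Expected failures per shift: {expected_failures(0.99, 600, 8):.1f}")
```

Under these assumptions, a 100-step task at 99 percent per-step accuracy finishes without error only about 37 percent of the time, and a shift of 4,800 decisions sees roughly 48 expected errors.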

Traditional AI systems are task-specific and depend on massive, costly data collection cycles. They function as isolated components in a larger software stack, limiting adaptability. Recent vision-language-action models show promise by grounding perception, reasoning, and control in one space, allowing robots to generalize across tasks. But to make them performant, many try to replicate the brute-force scaling that worked for digital AI, forgetting that it was built on decades of human-curated online data.

Physical AI demands a different path: achieving reliability and performance without excessive data requirements.

Core Requirements for Physical AI

Building Physical AI requires more than larger datasets or faster GPUs. It demands systems that are engineered for reliability, data efficiency, and physics-grounded understanding — the three foundations of deployable intelligence.

Reliability and Interpretability

In the physical world, even small errors have consequences. Robots must not only perform correctly but do so predictably and explainably. Each decision — a grasp, a turn, a stop — must be auditable, measurable, and consistent across conditions. Reliability is not a metric; it is a design constraint.

Escaping the Data Mirage

Robots cannot depend on endless field trials or billion-dollar data pipelines. Every real-world example carries cost, time, and risk. Physical AI must learn efficiently — finding structure and causality from limited experience rather than brute-force scale. True progress lies not in collecting more, but in understanding better.

Physics as Intelligence

The physical world follows structure and causality — gravity, friction, and motion govern how everything interacts. Physical AI embeds these principles directly into its models, giving robots built-in priors about how the world behaves. This reduces data needs, improves reliability, and allows them to reason from understanding rather than memorization.

By turning physics into a foundation for learning, we move from imitation to true intelligence — and toward a blueprint for building it at scale.

Building Physical AI: The Blueprint

Building Physical AI is both a scientific and engineering challenge. It requires models that can understand the world through physics, learn efficiently from limited data, and adapt seamlessly across tasks.

This section outlines how deployable intelligence is actually built — from multimodal foundations to compositional reasoning systems.

Part 1 — The Technology Blueprint: How Intelligence Is Built

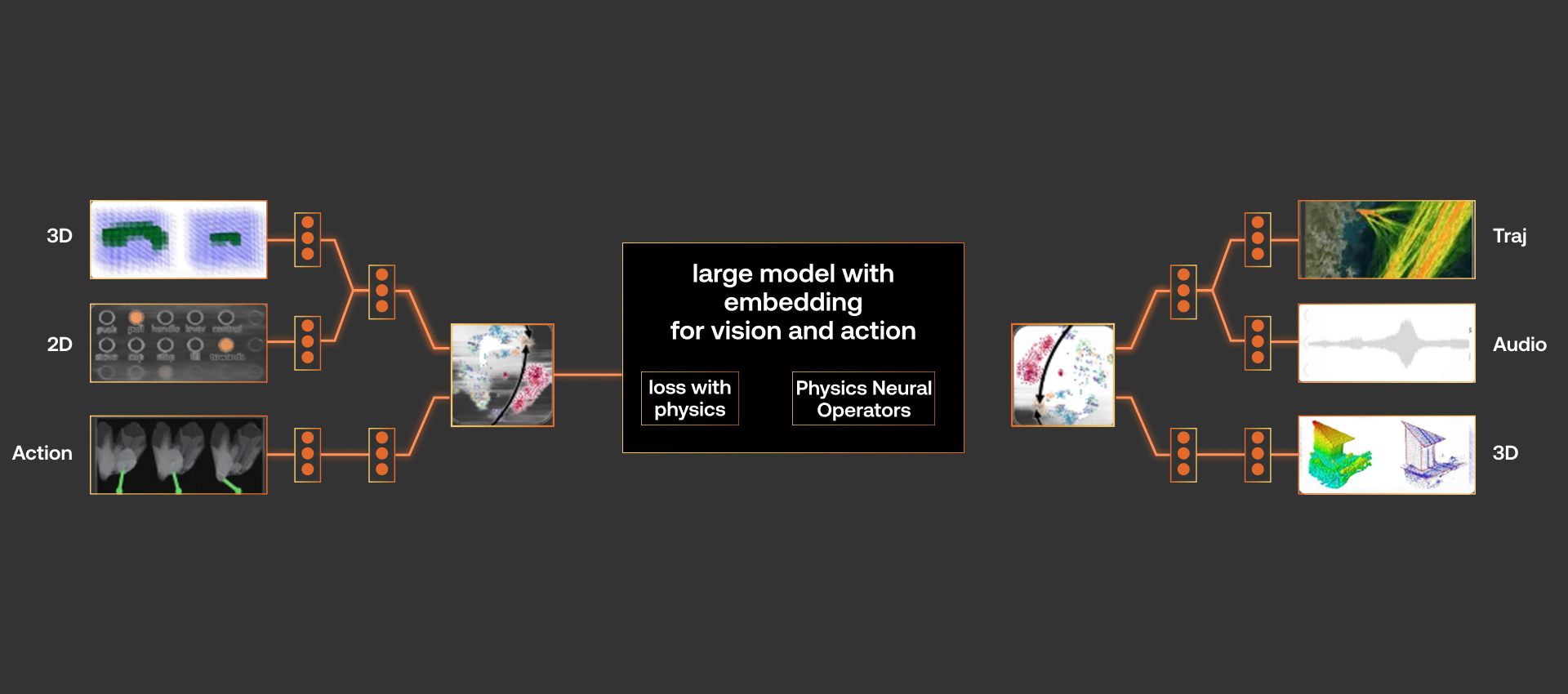

1. Unified Models that See, Think, and Act

At the core of Physical AI are Vision-Language-Action (VLA) models — multimodal generalist agents that unify perception, reasoning, and control into a shared intelligence. Instead of treating vision, planning, and motion as separate modules, VLAs connect them within a common representation space. This allows robots to interpret complex scenes, reason about goals, and act with contextual awareness.

However, current VLAs often struggle with interpretability and modularity, making integration into robotic systems difficult. They also face reproducibility and data-intensity challenges, because scaling these models requires impractically large real-world datasets. This is a gap Physical AI must bridge.

2. Physics-Informed Learning: The Shortcut to Real-World Intelligence

Physical AI learns from structured experience rather than brute-force scale. When physics is embedded directly into the model and agent architecture, robots gain an innate understanding of how the world behaves — geometry, motion, force, and contact. This grounding allows them to infer and adapt without millions of examples, achieving orders of magnitude faster learning and stronger generalization across environments.

The outcome is a unified world model that not only learns efficiently but also transfers reliably from simulation to reality.
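As a toy illustration of why a physics prior reduces data needs, the sketch below fits a falling object's trajectory from only three samples. Because the model structure is fixed by kinematics (h(t) = h0 - g t^2 / 2 for a drop from rest), only one parameter, g, has to be learned. The scenario, function names, and sample values are assumptions made for illustration; this is not the architecture described above.

```python
from typing import Sequence


def fit_gravity(times: Sequence[float], heights: Sequence[float], h0: float) -> float:
    """Least-squares estimate of g for a drop from rest at height h0.

    With x = t^2 / 2 and y = h0 - h, the model is y = g * x, so the
    closed-form solution is g = sum(x * y) / sum(x * x).
    """
    if len(times) != len(heights):
        raise ValueError("times and heights must have the same length")
    numerator = sum((h0 - h) * t ** 2 for t, h in zip(times, heights))
    denominator = sum(t ** 4 for t in times)
    if denominator == 0:
        raise ValueError("need at least one sample with t != 0")
    return 2.0 * numerator / denominator


def predict_height(t: float, h0: float, g: float) -> float:
    """Predict height at time t using the same kinematic model."""
    return h0 - 0.5 * g * t ** 2


if __name__ == "__main__":
    h0 = 2.0  # hypothetical drop height in metres
    times = [0.1, 0.2, 0.3]
    # Synthetic, noise-free observations generated with g = 9.81 m/s^2.
    heights = [h0 - 0.5 * 9.81 * t ** 2 for t in times]
    g_hat = fit_gravity(times, heights, h0)
    # Extrapolate beyond the observed time range.
    print(g_hat, predict_height(0.5, h0, g_hat))
```

A generic function approximator would need many more samples to extrapolate outside the observed range, whereas the physics-constrained model extrapolates by construction, provided the assumed physics actually holds.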

3. Compositional Intelligence: Thin Agents, Shared Understanding

A single model can’t reliably handle every robotic task. Instead, Physical AI should use thin agents — perception, planning, or control modules — that interface with a shared physics-informed core model. These agents contribute specialized reasoning while relying on the same underlying knowledge of the world.

Through this architecture, new capabilities can be plugged in modularly without retraining the entire system. Agents communicate via shared protocols, coordinating actions in real time while preserving interpretability and consistency.

This design scales intelligence by composition, not complexity — maintaining a stable, physics-grounded core that supports innovation at the edges.
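One way to picture this architecture is a set of small agents that share an interface and all query the same core model. The sketch below is hypothetical throughout: `CoreModel`, `WorldState`, `SafetyAgent`, `PlanningAgent`, and `run_agents` are illustrative names, and the "core" is reduced to a single kinematic rule (stopping distance v^2 / 2a) to keep the example small.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol


@dataclass(frozen=True)
class WorldState:
    obstacle_distance_m: float
    speed_mps: float


class CoreModel:
    """Hypothetical shared core: one physics rule that every agent relies on."""

    def __init__(self, max_decel_mps2: float) -> None:
        if max_decel_mps2 <= 0:
            raise ValueError("max_decel_mps2 must be positive")
        self.max_decel_mps2 = max_decel_mps2

    def stopping_distance(self, speed_mps: float) -> float:
        return speed_mps ** 2 / (2.0 * self.max_decel_mps2)

    def max_safe_speed(self, free_distance_m: float) -> float:
        return (2.0 * self.max_decel_mps2 * max(free_distance_m, 0.0)) ** 0.5


class Agent(Protocol):
    """Shared protocol: every thin agent exposes a name and a proposal."""

    name: str

    def propose(self, state: WorldState, core: CoreModel) -> Dict[str, object]: ...


class SafetyAgent:
    name = "safety"

    def propose(self, state: WorldState, core: CoreModel) -> Dict[str, object]:
        # Stop if the robot cannot brake within the free distance.
        needed = core.stopping_distance(state.speed_mps)
        return {"stop": needed >= state.obstacle_distance_m}


class PlanningAgent:
    name = "planning"

    def __init__(self, cruise_speed_mps: float) -> None:
        self.cruise_speed_mps = cruise_speed_mps

    def propose(self, state: WorldState, core: CoreModel) -> Dict[str, object]:
        # Never plan a speed the shared core says cannot be braked in time.
        safe = core.max_safe_speed(state.obstacle_distance_m)
        return {"target_speed_mps": min(self.cruise_speed_mps, safe)}


def run_agents(agents: List[Agent], state: WorldState, core: CoreModel) -> Dict[str, Dict[str, object]]:
    """Collect each agent's proposal, keyed by agent name, for auditing."""
    return {agent.name: agent.propose(state, core) for agent in agents}


if __name__ == "__main__":
    core = CoreModel(max_decel_mps2=2.0)
    state = WorldState(obstacle_distance_m=4.0, speed_mps=5.0)
    print(run_agents([SafetyAgent(), PlanningAgent(6.0)], state, core))
```

Adding a capability here means writing another class that satisfies `Agent`; the core is untouched, and because every proposal is a plain dictionary keyed by agent name, each decision can be logged and inspected.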

Part 2 — The Infrastructure Blueprint: How Intelligence Is Scaled

Deploying Physical AI is more than making smart models — it’s about building the systems that let those models live, learn, and adapt in the wild. Infrastructure ensures that intelligence doesn’t stay in the lab but becomes resilient, real-time, and scalable.

1. Training-to-Inference Co-Design: Intelligence That Distills Across Robotic Platforms

The goal of Physical AI is to design for the robot, not for the cloud.

Modern architectures now make it possible to distill intelligence naturally across platforms — from large multimodal models trained in the datacenter to compact, real-time agents on edge hardware. This co-design ensures the same core intelligence can scale up for learning and scale down for action without losing context or fidelity.
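The text does not say how distillation is carried out. As one common possibility, the sketch below computes the standard soft-target knowledge distillation loss: the KL divergence between temperature-softened teacher and student output distributions, scaled by the squared temperature. The logits and the temperature value are illustrative assumptions.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax over temperature-scaled logits."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    teacher_logits: Sequence[float],
    student_logits: Sequence[float],
    temperature: float = 2.0,
) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    if len(teacher_logits) != len(student_logits):
        raise ValueError("teacher and student must have the same number of logits")
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [1.0, 2.0, 3.0]  # hypothetical logits from a large model
    student = [3.0, 2.0, 1.0]  # hypothetical logits from a compact edge model
    print(distillation_loss(teacher, student))
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, which is what lets a compact on-robot model be trained toward the behavior of a larger datacenter model.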

Platforms such as NVIDIA Orin and NVIDIA Thor bring datacenter-class AI performance to the edge, enabling robots to think and act locally with low latency, high safety, and full autonomy.

2. Real-Time Runtime and Resilient Agents

Once deployed, robots operate in unpredictable environments where every millisecond matters. Infrastructure must support deterministic control loops, adaptive latency, and graceful recovery from failures.

Modern Physical AI systems are increasingly GPU-first, running perception, planning, and control in parallel instead of the serial CPU pipelines common in traditional ROS stacks. This shift reduces latency and enables richer, continuous sensing and reasoning at the edge. To sustain reliability, agents must manage GPU workloads, fall back safely to CPU when needed, and stay stable under thermal or compute stress.
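The fallback behavior described above can be sketched as a small wrapper that tries a primary (for example, GPU-backed) path and switches to a fallback path if the primary raises or misses its deadline. Everything here is a hypothetical illustration: `run_with_fallback` is not an API of ROS or any GPU library, and the callables stand in for real inference code.

```python
import time
from typing import Callable, Tuple, TypeVar

In = TypeVar("In")
Out = TypeVar("Out")


def run_with_fallback(
    primary: Callable[[In], Out],
    fallback: Callable[[In], Out],
    frame: In,
    deadline_s: float,
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[Out, str]:
    """Return (result, path_used), preferring the primary path.

    The deadline is checked after the primary call returns, so this detects
    an overrun but cannot preempt a stalled call. A real runtime would need
    a watchdog or a separate execution context for that.
    """
    start = clock()
    try:
        result = primary(frame)
        if clock() - start <= deadline_s:
            return result, "primary"
    except RuntimeError:
        # Treat device errors as recoverable and fall through to the fallback.
        pass
    return fallback(frame), "fallback"


if __name__ == "__main__":
    def gpu_path(x: int) -> int:
        raise RuntimeError("device unavailable")  # simulated GPU failure

    def cpu_path(x: int) -> int:
        return x * 2  # simpler computation that always works

    print(run_with_fallback(gpu_path, cpu_path, 3, deadline_s=0.01))
```

Returning the path used alongside the result makes degraded operation visible to the rest of the system, so a planner can, for instance, slow the robot down while it is running on the fallback path.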

3. Continuous Learning Pipelines Built for Adaptation

In Physical AI, scalability is no longer about data volume but about how feedback flows. Real progress comes from adaptive pipelines that connect each robot’s experience directly to its underlying model.

Each robot should both act and learn, refining intelligence through its own operation. A well-designed model-to-agent architecture lets small but important feedback update the shared model and propagate improvements across the fleet with minimal retraining.

Scalability now means responsiveness—the ability for robots to learn collectively and improve continuously from focused, high-value experience rather than from mountains of data.
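One simple way to propagate feedback across a fleet, sketched below, is a weighted average of per-robot parameter updates, in the spirit of federated averaging. This is an assumption about how such a pipeline might work, not the method described above; `aggregate_updates`, the parameter name `friction`, and the weights are hypothetical.

```python
from typing import Dict, List, Tuple

Params = Dict[str, float]


def aggregate_updates(
    shared: Params,
    updates: List[Tuple[Params, float]],
    step_size: float = 1.0,
) -> Params:
    """Apply the weighted mean of per-robot deltas to the shared parameters.

    Each update is (delta, weight), where weight might reflect how many
    high-value experiences the robot contributed. Returns a new dict and
    leaves `shared` unmodified.
    """
    total_weight = sum(weight for _, weight in updates)
    if total_weight <= 0:
        return dict(shared)
    merged = dict(shared)
    for name, value in shared.items():
        mean_delta = sum(delta.get(name, 0.0) * weight for delta, weight in updates) / total_weight
        merged[name] = value + step_size * mean_delta
    return merged


if __name__ == "__main__":
    shared_model = {"friction": 0.5}
    fleet_updates = [
        ({"friction": 0.1}, 1.0),   # robot A: one relevant experience
        ({"friction": -0.1}, 3.0),  # robot B: three relevant experiences
    ]
    print(aggregate_updates(shared_model, fleet_updates))
```

Because only small deltas travel between robots and the shared model, the fleet can improve from a handful of targeted experiences without shipping raw sensor data or retraining from scratch.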

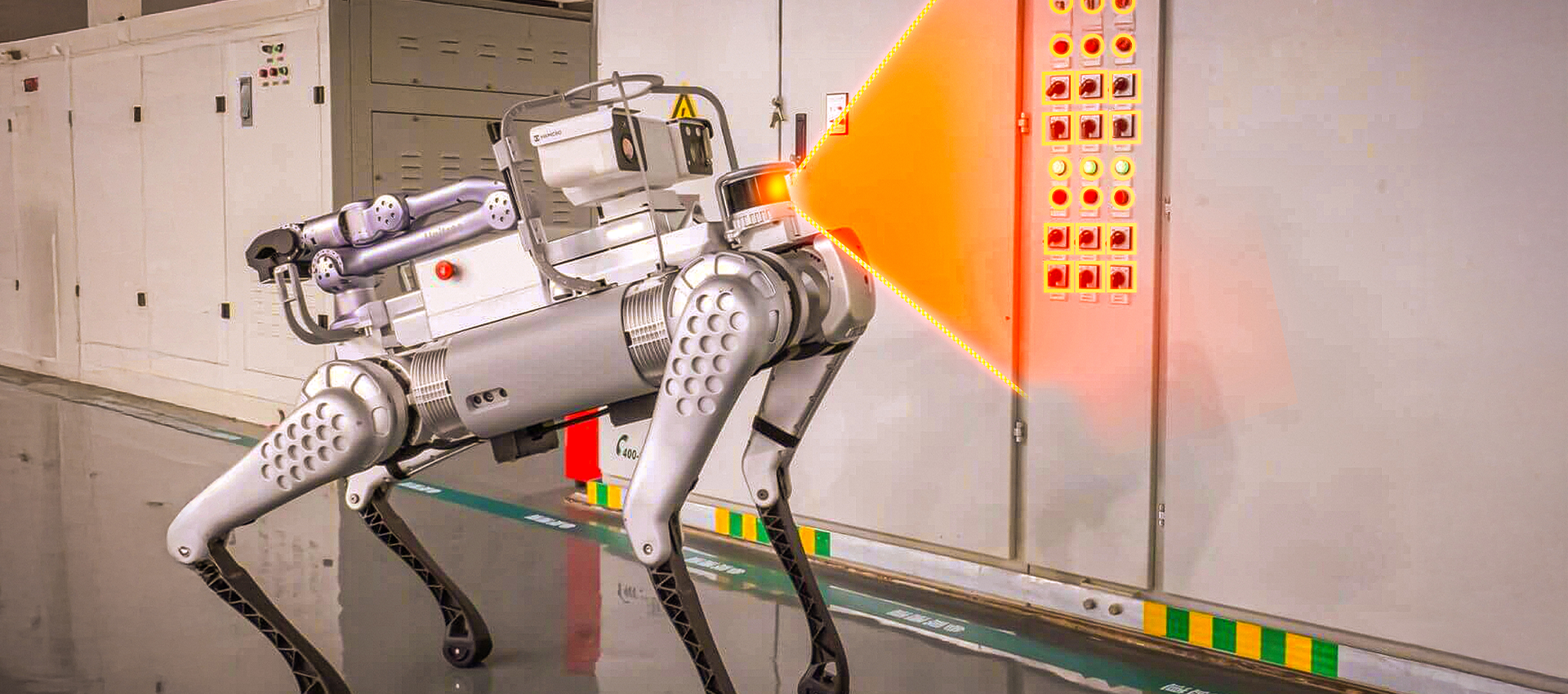

Applications of Physical AI: From Demos to Deployment

The world has seen enough robotic demos — including the kind made to work beautifully for one video and never again. The difference between a humanoid stage clip and a deployable system is not polish but persistence — intelligence that scales.

Physical AI makes that possible. It turns single-shot performances into sustained, self-improving autonomy — robots that learn, adapt, and endure in the wild. The following examples show where that shift is already taking shape.

Warehouses and Logistics

Warehouses are filled with automation, yet most systems are brittle — fixed paths, pre-defined boxes, little flexibility. Physical AI makes automation adaptive. Robots learn to handle damaged or irregular packages, re-route safely around humans, and recover from uncertainty without human intervention. The result is not just faster throughput, but reliability that meets industrial uptime requirements.

Agriculture

Farms face rising costs, erratic yields, and shrinking labor pools. Robots can already see plants and move through fields — but without Physical AI, they fail when lighting, weather, or terrain changes. Embedding physics and adaptive learning enables machines to recognize crops through dust, track growth stages, and harvest with centimeter precision. Physical AI turns seasonal prototypes into year-round agricultural systems that improve with every cycle.

Construction and Heavy Machinery

Construction sites remain among the least automated environments because no two projects are alike. Robots that rely on static scripts break down in this variability. Physical AI enables machines to understand geometry, material behavior, and context, allowing them to assist in finishing, inspection, and logistics even as conditions shift daily. The same model that drives a polishing robot can guide an excavator — learning from physics, not memorization.

Healthcare and Service Robotics

Hospitals and care facilities demand reliability, not novelty. Robots must navigate crowded hallways, deliver supplies, and assist staff safely around people. Physical AI brings situational awareness and context sensitivity, enabling systems that operate day and night in dynamic, human-centered spaces — moving beyond pilot programs to become trusted infrastructure.

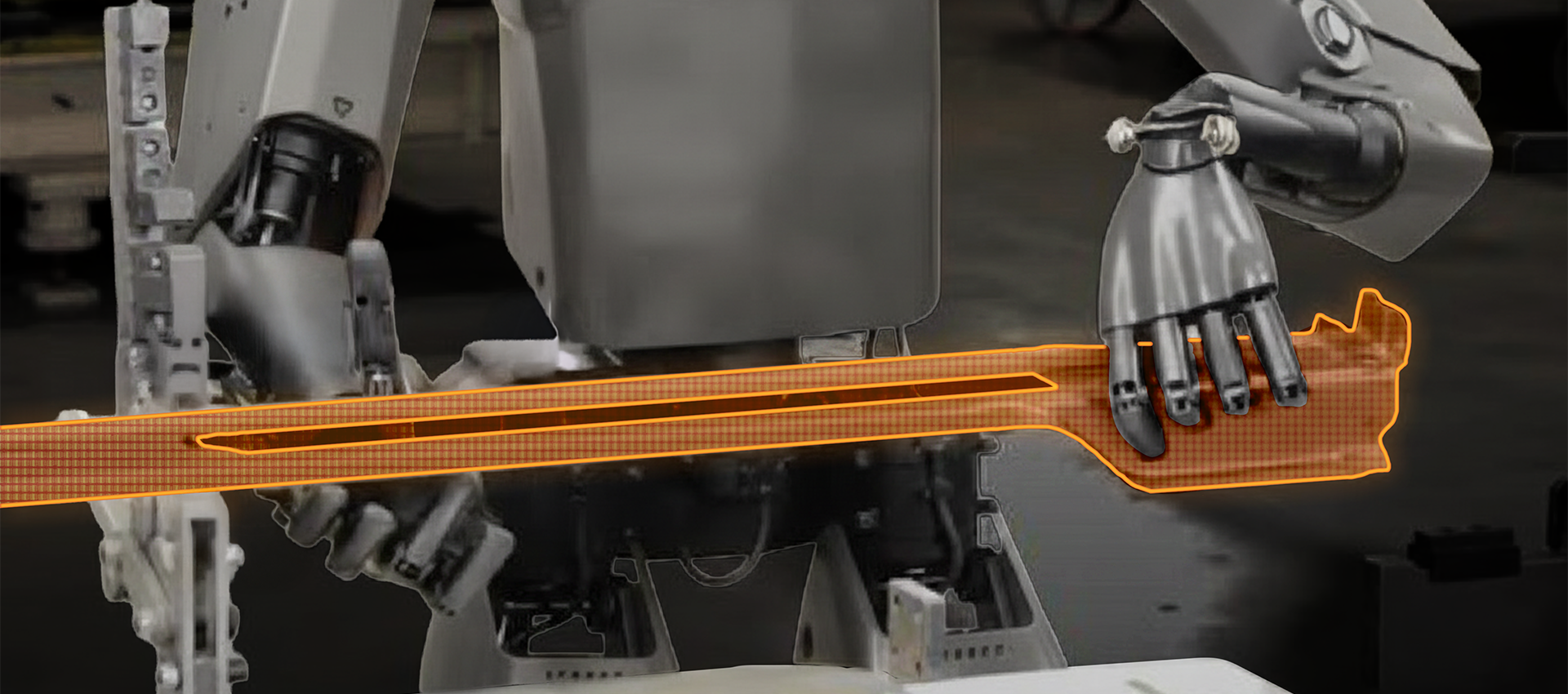

Manufacturing and Assembly

On factory floors, the challenge is not just precision but adaptation. Traditional automation handles uniform inputs; Physical AI extends it to unstructured tasks — assembly of variable parts, inspection under glare, or handling deformable materials. By reasoning through cause and effect, robots can correct themselves mid-process, reducing downtime and human oversight.

The Blueprint is a $10 Trillion Market

Physical AI is not an incremental upgrade to robotics. It is the architecture for intelligence to step off the screen and into the world. It demands models grounded in physics, systems built for reliability, and infrastructure designed for real-world scale.

The opportunity is vast. Across logistics, agriculture, healthcare, construction, manufacturing, and beyond, trillions in value lie waiting for machines that can operate dependably, adaptively, and at scale. Those who master Physical AI first will not just build robots. They will redefine industries and create the foundation of a $10 trillion economy.

The path forward is clear. Invest in modular intelligence, embed physics into models, and design for deployment from day one. For CEOs, CTOs, and builders, this is the moment to lead, to move beyond demos and define the next era of intelligent machines.

Resources for Builders

- Transparency & Accountability in Generative AI for Robotics (Ransalu Senanayake)

- Inside the VLAM Stack: Building Generalist Agents (Honglak Lee)

- Generative AI in Robotics: Doing More with Less Data

- Infrastructure of Large-Scale AI (Aditya Jami)

- Model Concept Protocol (Jiquan Ngiam)

- Designing Multimodal Robots for an Unstructured World (Kofi Asante)

- Generative AI for Robotics: From Demos to Deployment

- Forbes: From Words To Work: Generative AI’s Leap Into The Physical World